
Artificial Intelligence (AI) has moved from experimental pilot projects into mainstream business operations, driving decisions, automating workflows, and shaping customer experiences across every industry.

Yet AI’s capabilities have outpaced many organizations’ understanding of the risks involved. Without structured governance, businesses expose themselves to ethical missteps, legal non‑compliance, and security vulnerabilities that could result in heavy fines, reputational harm, and loss of customer trust.

At AlignAI.dev, building a structured AI governance program is one of the most strategic priorities of my leadership team. AI governance is the systematic framework that ensures AI systems operate safely, fairly, securely, and in alignment with both legal requirements and societal values.

In 2026, this is no longer optional.

Governments around the world are actively putting laws, regulations, and enforcement mechanisms into place governing AI transparency, bias mitigation, user safety, algorithmic accountability, and data protection. Businesses that fail to implement robust governance frameworks risk regulatory penalties and operational disruption.

In this blog, we will:

Define the core components of AI governance

Explore the ethical foundations that should guide AI use

Present global legal policies that businesses must understand

Provide actionable best practices for ethical and secure AI implementation

Highlight frameworks and security standards necessary for risk mitigation

This is a strategic operational guide designed for executives, compliance leaders, and technology owners tasked with driving responsible AI at scale.

AI governance refers to the policies, processes, standards, and guardrails that guide the ethical and responsible development, deployment, and use of AI systems.

It encompasses oversight mechanisms to address risks such as bias, privacy violations, lack of transparency, security vulnerabilities, and misuse, while fostering innovation and trust. IBM

AI governance frameworks typically bring together ethics, legal compliance, security and risk management, data governance, and monitoring practices to ensure AI systems adhere to organizational values and regulatory requirements throughout their lifecycle, from design through retirement.

Why This Matters Now

AI governance is urgent because AI adoption has surged faster than the controls around it. Here are key data points from 2025 that illustrate the gap and the risks:

AI adoption is far ahead of governance readiness. A global EY survey found that while 72% of executives report integrating or scaling AI across initiatives, only one‑third of companies have comprehensive governance controls aligned to responsible AI practices. EY

Most organizations are using AI without formal policies. Research from ISACA indicates only about 31% of organizations have formal, comprehensive AI policies in place, despite rising adoption among staff and executives. ISACA

Security risks from informal AI use are rising sharply. Gartner predicts that 40% of enterprises will experience security or compliance breaches due to unauthorized “shadow AI” usage if policies are not established, highlighting the need for governance beyond technology deployment. IT Pro

Public trust in AI depends on oversight and governance. A KPMG‑led global study found that although 66% of people use AI regularly, only 46% say they trust AI, and less than half of organizations provide responsible AI training, a key governance signal. KPMG

Executives are encountering ethical and operational failures. According to a recent study, 95% of executives have experienced at least one AI mishap, but only 2% of firms meet responsible use standards, demonstrating a disconnect between adoption and governance maturity. The Economic Times

AI use without governance can violate policy, compliance, and security expectations. A global workforce study found 58% of employees use AI tools at work without proper evaluation or permission, and 44% use them improperly, underscoring the need for clear policies and governance structures. KPMG

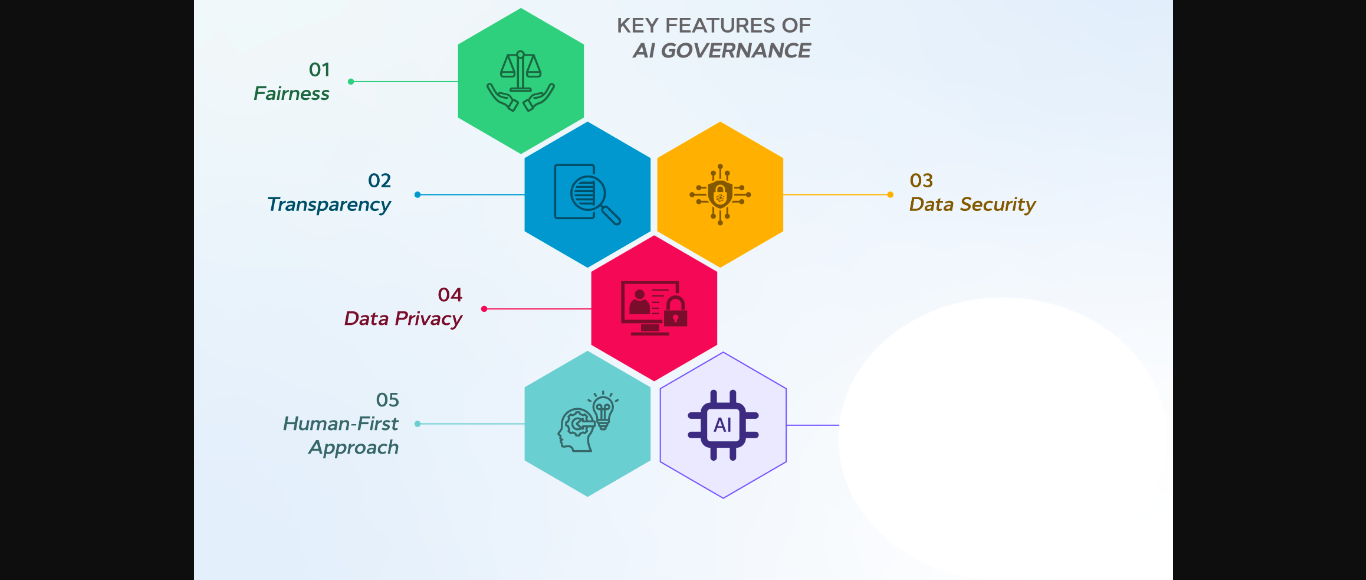

Several frameworks and industry guidelines identify key domains for governance.

A synthesis of leading AI governance principles reveals five foundational pillars businesses must address:

Ethics is central to trustworthy AI. Ethical AI principles often include fairness, non‑discrimination, respect for privacy, and human centricity. Without governance, AI systems can unintentionally embed or amplify biases, leading to discrimination in outcomes such as hiring, lending, or medical recommendations.

Ethics in governance requires both principles and practical mechanisms such as bias detection tools, fairness testing, and inclusive datasets. FSD Tech Blogs

AI decisions must be explainable and transparent wherever they have consequences for people. Transparency includes documenting how models are built, data sources, training methods, and decision logic. In some industries, explainability is a legal requirement as well as a best practice. Sendbird

AI governance must align with relevant laws such as data protection (e.g., GDPR in the EU), industry‑specific regulations, and emerging AI‑specific legislation like the EU AI Act, which categorizes AI systems by risk and sets compliance requirements accordingly. AI21

In the United States, federal initiatives such as the 2023 Biden AI Executive Order (since rescinded in January 2025) emphasized safety assessments, risk disclosures, and federal guidance on AI safeguards. Investopedia

State‑level laws like California’s Transparency in Frontier Artificial Intelligence Act (SB‑53) require public safety disclosures and whistleblower protections for AI developers. The Verge

AI governance includes risk identification, assessment, and mitigation, covering system reliability, data security, and adversarial risks. Security controls must protect AI models against theft, data leakage, poisoning, and unauthorized manipulation. Sendbird

A robust risk register and continuous monitoring are essential to anticipate and address evolving threats early in the AI lifecycle.

Establishing formal governance bodies, such as AI ethics boards, cross‑functional committees, and executive oversight, embeds accountability. These groups define policies, approve high‑risk AI initiatives, and enforce compliance through audits and continuous evaluation. SpringerLink

Accountability also includes defining roles and responsibilities for model owners, data stewards, and compliance teams.

The table below summarizes key AI governance laws, regulations, and legal frameworks across major jurisdictions, based on the latest global policy developments.

| Jurisdiction | Legal Policy / Regulation | Key Features | Source |
| --- | --- | --- | --- |
| European Union | Artificial Intelligence Act (AI Act) | World’s first comprehensive AI law with risk‑based classification (unacceptable, high, limited, and minimal risk), transparency, human oversight, and compliance requirements; phased enforcement through 2025–2027. | |
| United States (Federal) | Executive Orders & Federal AI Guidance | Federal directives requiring safe, secure AI development, risk assessments, and coordination across agencies; no single federal AI statute yet, but policy focus on alignment, safety, and competitiveness. | AP News |
| United States (State – California) | Transparency in Frontier Artificial Intelligence Act (SB‑53) | First state law mandating transparency reporting, catastrophic risk assessments, and whistleblower protections for high‑impact AI models. | |
| China | AI Governance Framework, PIPL, CSL, DSL | China’s legal regime integrates AI risk management, mandatory content labeling for AI‑generated output (2025), algorithm registration, data privacy, cybersecurity, and centralized governance controls. | IAPP |
| International (Council of Europe) | Framework Convention on Artificial Intelligence | Treaty establishing AI governance principles aligned with human rights, democracy, and rule of law; requires risk and impact assessments and accountability mechanisms. | |
| United Kingdom | Pro‑Innovation AI Regulation Framework | Flexible, sector‑by‑sector regulation emphasizing innovation and principles rather than comprehensive codes; guidance supporting safety and fairness. | |
| Canada | AI Oversight Strategy & Privacy Laws | No unified AI statute as of 2025; framework relies on privacy and consumer protection statutes with emphasis on transparency and accountability. | |
| South Korea | Basic AI Act (effective 2026) | National law requiring risk assessments and transparency obligations for certain AI systems. | |
| Japan & Singapore | Guidelines & Ethical Frameworks | Government‑issued AI guidelines prioritizing responsible AI, human rights, and industry guidance rather than hard law. | |
| Brazil | AI Bill (No. 2338/2023) | Comprehensive AI bill approved by the Senate in December 2024; adopts an EU‑like risk‑based approach (legislative process ongoing). | JD Supra |

The EU AI Act is the most mature and structured example of horizontal AI regulation, imposing deep compliance obligations on high‑risk AI.

In the U.S., federal leadership is emerging, but state‑level laws like California’s SB‑53 are stepping in with specific mandates.

China’s AI governance emphasizes security, algorithm control, data privacy, and content labeling.

International treaties such as the Framework Convention on AI reflect early multilateral consensus on ethical principles and governance mechanisms.

ISO/IEC standards like ISO/IEC 42001 provide globally recognized frameworks for AI management systems, helping organizations align governance with best practices and regulatory expectations.

International organizations like the OECD and UNESCO have issued AI principles emphasizing human rights, fairness, and safety, which influence corporate governance policies globally. Secoda

Below are the core governance standards that every business deploying AI should implement to mitigate risk and ensure ethical, legal, and secure use.

AI governance must start with a formal policy outlining why governance exists, which principles it upholds, and how compliance will be enforced.

Governance committees should include representatives from legal, IT security, data science, ethics, and business leadership to ensure comprehensive oversight.

Ethical guidelines should cover fairness, inclusiveness, and respect for human values. These guidelines are not optional, and they need to be operationalized through procedures such as:

Dataset bias audits

Fairness metrics in model evaluation

Explainability checks before deployment

Human review for critical decisions
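To make "fairness metrics in model evaluation" concrete, here is a minimal sketch of two common group fairness measures: the demographic parity difference and the disparate impact ratio. The function names and the sample data are our own illustration, not a standard library API, and a real audit would use larger samples and metrics chosen for the specific use case.

```python
from collections import defaultdict

def selection_rates(groups, decisions):
    """Return the share of positive decisions (1 = approved) per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in zip(groups, decisions):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_difference(groups, decisions):
    """Gap between the highest and lowest group selection rates (0 = parity)."""
    rates = selection_rates(groups, decisions)
    return max(rates.values()) - min(rates.values())

def disparate_impact_ratio(groups, decisions):
    """Lowest selection rate divided by the highest (1.0 = parity)."""
    rates = selection_rates(groups, decisions)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

# Hypothetical audit sample: one group label and one model decision per applicant.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
decisions = [1, 1, 1, 0, 1, 0, 0, 0]

gap = demographic_parity_difference(groups, decisions)
ratio = disparate_impact_ratio(groups, decisions)
print(f"parity gap={gap:.2f}, impact ratio={ratio:.2f}")
```

A ratio below 0.8 is often treated as a warning sign (the "four‑fifths" rule of thumb from US employment guidance), but the right threshold depends on context and should be set by the governance committee, not hard‑coded by default.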

Every AI project should begin with a risk assessment covering:

Bias and discrimination risks

Security vulnerabilities

Privacy infringements

Safety and reliability concerns

Risk registers, mitigation plans, and periodic re‑evaluations help organizations remain ahead of emerging threats. Common Sense
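A risk register can start as a very simple data structure. The sketch below assumes a 1 to 5 likelihood and impact scale and an escalation threshold of 15; both are illustrative choices, and the field names and sample entries are hypothetical.

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class RiskEntry:
    title: str
    category: str      # e.g. "bias", "security", "privacy", "safety"
    likelihood: int    # 1 (rare) to 5 (almost certain), assumed scale
    impact: int        # 1 (negligible) to 5 (severe), assumed scale
    owner: str
    mitigation: str
    next_review: date

    def __post_init__(self):
        # Reject scores outside the assumed 1-5 scale.
        for name in ("likelihood", "impact"):
            if not 1 <= getattr(self, name) <= 5:
                raise ValueError(f"{name} must be between 1 and 5")

    @property
    def score(self) -> int:
        """Simple likelihood x impact score."""
        return self.likelihood * self.impact

def needs_escalation(entry: RiskEntry, threshold: int = 15) -> bool:
    """Flag risks whose score meets or exceeds the escalation threshold."""
    return entry.score >= threshold

# Hypothetical register entries for illustration only.
register = [
    RiskEntry("Biased loan approvals", "bias", 4, 5, "model-owner",
              "Quarterly fairness audit", date(2026, 3, 1)),
    RiskEntry("Training data leakage", "privacy", 2, 4, "data-steward",
              "Access controls and encryption", date(2026, 6, 1)),
]

today = date(2026, 4, 1)  # fixed date so the example is reproducible
escalate = [r.title for r in register if needs_escalation(r)]
overdue = [r.title for r in register if r.next_review < today]
print("escalate:", escalate)
print("overdue for review:", overdue)
```

Recording an owner and a next review date on every entry is what turns the register from a static list into the periodic re‑evaluation described above.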

High‑quality, well‑governed data is fundamental to ethical and secure AI. Key controls include:

Data access controls

Lineage tracking

Encryption and anonymization

Data quality checks

These measures support compliance with privacy laws like GDPR and CCPA, and also improve accuracy and trustworthiness. CloudEagle
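As one small example of the controls above, the sketch below pseudonymizes direct identifiers with a keyed hash (HMAC‑SHA256 from the Python standard library) before data reaches a training pipeline. The field names and the key are hypothetical. Note that this is pseudonymization, not full anonymization: under GDPR, pseudonymized data is still personal data, and the key must be stored and rotated securely.

```python
import hashlib
import hmac

def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (hex string)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()

def scrub_record(record: dict, pii_fields: set, secret_key: bytes) -> dict:
    """Return a copy of the record with the listed PII fields pseudonymized."""
    return {
        key: pseudonymize(str(value), secret_key) if key in pii_fields else value
        for key, value in record.items()
    }

# Hypothetical key and record; in practice the key comes from a secrets manager.
key = b"example-key-do-not-use-in-production"
record = {"email": "jane@example.com", "age_band": "30-39", "score": 0.82}

clean = scrub_record(record, pii_fields={"email"}, secret_key=key)
print(clean["email"][:12], clean["age_band"], clean["score"])
```

Because the same input and key always produce the same digest, records can still be joined across datasets without exposing the underlying identifier.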

Governance must span the entire model lifecycle:

Design and requirements

Training and validation

Deployment

Monitoring and drift detection

Retirement and archival

Documentation at each stage is critical for explainability and auditability. CloudEagle
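One way to enforce lifecycle documentation is to refuse stage transitions that lack a documentation reference and a named approver. The sketch below is a simplified state machine under our own assumptions: the allowed transitions, class names, and document paths are illustrative, and monitoring and drift detection are collapsed into a single stage.

```python
from enum import Enum

class Stage(Enum):
    DESIGN = "design"
    TRAINING = "training"
    DEPLOYMENT = "deployment"
    MONITORING = "monitoring"
    RETIREMENT = "retirement"

# Assumed transition rules; adapt to your own governance policy.
ALLOWED = {
    Stage.DESIGN: {Stage.TRAINING},
    Stage.TRAINING: {Stage.DESIGN, Stage.DEPLOYMENT},
    Stage.DEPLOYMENT: {Stage.MONITORING},
    Stage.MONITORING: {Stage.TRAINING, Stage.RETIREMENT},
    Stage.RETIREMENT: set(),
}

class ModelRecord:
    def __init__(self, name: str):
        self.name = name
        self.stage = Stage.DESIGN
        self.history = []  # audit trail of (from, to, doc_ref, approver)

    def advance(self, target: Stage, doc_ref: str, approver: str) -> None:
        """Move to the next stage only with documentation and an approver."""
        if target not in ALLOWED[self.stage]:
            raise ValueError(f"{self.stage.value} -> {target.value} is not allowed")
        if not doc_ref or not approver:
            raise ValueError("a documentation reference and approver are required")
        self.history.append((self.stage, target, doc_ref, approver))
        self.stage = target

# Hypothetical model and document references.
model = ModelRecord("credit-scoring-v2")
model.advance(Stage.TRAINING, "docs/requirements.md", "alice")
model.advance(Stage.DEPLOYMENT, "docs/validation-report.md", "bob")
print(model.stage.value, len(model.history))
```

The `history` list doubles as the audit trail: every transition records who approved it and which document justified it.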

Automated systems should never operate without clear human oversight. Humans must:

Define AI objectives

Approve model releases

Review outputs in high‑stakes settings

Investigate incidents and report issues

This avoids unchecked automated decisions that conflict with legal or ethical standards. Secoda
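In practice, "review outputs in high‑stakes settings" often means a routing rule in front of the model. The sketch below sends any high‑stakes or low‑confidence decision to a human reviewer; the `Decision` fields, the 0.9 confidence threshold, and the queue names are assumptions for illustration.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    subject_id: str
    outcome: str        # what the model proposes, e.g. "approve" or "deny"
    confidence: float   # model confidence between 0.0 and 1.0
    high_stakes: bool   # set by policy, e.g. lending, hiring, medical use

def route(decision: Decision, min_confidence: float = 0.9) -> str:
    """Return the queue a decision should go to before it takes effect."""
    if decision.high_stakes or decision.confidence < min_confidence:
        return "human_review"
    return "automated"

# Hypothetical decisions.
queue = [
    Decision("cust-001", "approve", 0.97, high_stakes=False),
    Decision("cust-002", "deny", 0.99, high_stakes=True),
    Decision("cust-003", "approve", 0.62, high_stakes=False),
]
for d in queue:
    print(d.subject_id, route(d))
```

Note that high‑stakes decisions go to a human regardless of confidence: a very confident model can still be confidently wrong.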

AI governance is not a one‑time effort. Organizations should implement:

Real‑time monitoring of model outputs

Periodic compliance audits

Incident logging and escalation

Governance KPIs tracking drift, accuracy, fairness, and security

Such continuous evaluation ensures that systems remain safe, compliant, and aligned with evolving standards. Sendbird
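Drift is one of the easier governance KPIs to automate. The sketch below computes a Population Stability Index (PSI) between a baseline sample and recent production values for a single numeric feature. The binning scheme, the small floor value used to avoid division by zero, and the sample data are our own simplifications.

```python
import math

def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline and a recent sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range values into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor each proportion at eps so the logarithm is always defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical feature values: a uniform baseline and a shifted production sample.
baseline = [i / 100 for i in range(100)]
shifted = [v + 0.3 for v in baseline]

print(f"no drift: {psi(baseline, baseline):.3f}")
print(f"shifted:  {psi(baseline, shifted):.3f}")
```

A common rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift, but these cut‑offs are conventions, not regulatory requirements, and should be tuned per model.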

Security is a critical but sometimes overlooked pillar of AI governance. Effective AI security involves:

AI systems can be vulnerable to manipulation through poisoned training data or adversarial inputs crafted to mislead a model. Security governance must include adversarial testing and defenses against such exploits. Reuters

Imposing stringent identity and access management (IAM) practices, such as least‑privilege principles and role‑based controls, prevents unauthorized use of AI systems or sensitive training data. CloudEagle
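A least‑privilege check can be expressed as a deny‑by‑default lookup: a user may perform an action only if one of their roles explicitly grants it. The roles and permission strings below are hypothetical; in production this logic would normally live in your identity provider or cloud IAM policies rather than in application code.

```python
# Hypothetical role-to-permission mapping; anything not listed is denied.
ROLE_PERMISSIONS = {
    "data_scientist": {"dataset:read", "model:train"},
    "ml_engineer": {"model:read", "model:deploy"},
    "auditor": {"model:read", "audit_log:read"},
}

def is_allowed(roles, permission: str) -> bool:
    """Deny by default; allow only if some role explicitly grants the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)

print(is_allowed(["data_scientist"], "model:train"))   # granted by role
print(is_allowed(["data_scientist"], "model:deploy"))  # not granted
print(is_allowed(["unknown_role"], "model:read"))      # unknown roles get nothing
```

Separating training from deployment permissions, as in this mapping, means no single role can both change a model and push it to production without a second person involved.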

Unintended outcomes or breaches should trigger formal incident processes, including reporting obligations under laws like the EU AI Act and state transparency requirements (e.g., California’s SB‑53). The Verge

A 2024 Gartner survey found that 78% of organizations using AI experienced at least one significant ethical incident, yet only a fraction had comprehensive governance in place. Common Sense

One notable example involved biased credit decisions from a financial AI, which resulted in regulatory scrutiny, reputational harm, and customer trust erosion, all avoidable with proper governance.

AI governance is evolving rapidly. Voluntary industry standards like ISO/IEC 42001 are likely to become de facto compliance benchmarks in regulated markets such as the EU.

Emerging global conventions, including treaties and collaborative frameworks, are shaping a future where responsible AI use is fundamental to business legitimacy rather than an optional compliance bolt‑on.

International efforts, including UN‑backed resolutions advocating safe, equitable AI for all nations, further underscore the importance of governance aligned with human rights and social good. AP News

AI governance has now become an operational necessity.

Businesses that fail to implement ethical, legal, and security standards risk regulatory penalties, harm to reputation, loss of customer trust, and exposure to security breaches.

By building AI governance programs that incorporate ethical guidelines, legal compliance, risk management, data and model governance, human oversight, and continuous monitoring, organizations both mitigate risks and unlock long‑term trust and innovation.

At AlignAI.dev, we take AI governance seriously because well‑governed AI is good for business, good for customers, and essential for ethical, legal, and secure operations. Our approach prioritizes:

Integrated governance from design through retirement

Ethics and fairness embedded into every model

Compliance with global regulations and emerging legal standards

Security controls that protect systems and data

Human oversight and accountability across the AI lifecycle

We treat governance as a strategic advantage, not just a risk mitigator, ensuring that AI systems are auditable, explainable, and responsible. We help organizations build governance frameworks that are practical, scalable, and aligned with both business objectives and global legal requirements.

Free Resource: Download our practical guide for leaders ready to embed AI into their core operations.

Complimentary AI Strategy Session: Book your 30-minute Align AI Strategy Session.